What do you think of when you hear the words virtual reality (VR)? Do you imagine someone wearing a clunky helmet attached to a computer with a thick cable? Do visions of crudely rendered pterodactyls haunt you? Do you think of Neo and Morpheus traipsing about the Matrix? Or do you wince at the term, wishing it would just go away?

If the last applies to you, you're likely a computer scientist or engineer, many of whom now avoid the words virtual reality even while they work on technologies most of us associate with VR. Today, you're more likely to hear someone use the words virtual environment (VE) to refer to what the public knows as virtual reality. We'll use the terms interchangeably in this article.


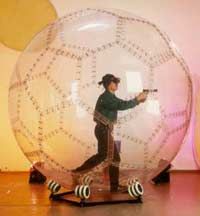

Naming discrepancies aside, the concept remains the same: using computer technology to create a simulated, three-dimensional world that a user can manipulate and explore while feeling as if he were in that world. Scientists, theorists and engineers have designed dozens of devices and applications to achieve this goal. Opinions differ on what exactly constitutes a true VR experience, but in general it should include:

- Three-dimensional images that appear to be life-sized from the perspective of the user

- The ability to track a user's motions, particularly his head and eye movements, and correspondingly adjust the images on the user's display to reflect the change in perspective
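
What does adjusting the images to match a user's head movements actually involve? Below is a minimal sketch in Python. It rests on assumptions of our own rather than on any particular VR system: a right-handed, y-up coordinate system with the viewer looking down the negative z-axis, a tracker that reports head position plus yaw and pitch angles, and a simple pinhole projection onto the display. The function names (`head_rotation`, `world_to_view`, `project`) are hypothetical. Each time the tracker reports, the renderer undoes the head's movement and rotation to find where a point in the virtual world sits relative to the viewer, then projects that point onto the display.

```python
import math
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def head_rotation(yaw: float, pitch: float) -> list:
    """Head orientation as a 3x3 matrix (assumed convention): yaw about the
    vertical y-axis, then pitch about the x-axis, angles in radians.
    Positive yaw turns the head left; positive pitch tilts it up."""
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    # The product R_y(yaw) @ R_x(pitch), written out element by element
    return [
        [cy, sy * sp, sy * cp],
        [0.0, cp, -sp],
        [-sy, cy * sp, cy * cp],
    ]


def world_to_view(point: Sequence[float], head_pos: Sequence[float],
                  yaw: float, pitch: float) -> Vec3:
    """Express a world-space point in the viewer's head coordinates."""
    r = head_rotation(yaw, pitch)
    # Undo the head's translation
    d = [point[k] - head_pos[k] for k in range(3)]
    # Undo the head's rotation: a rotation matrix's inverse is its transpose
    return tuple(sum(r[j][i] * d[j] for j in range(3)) for i in range(3))


def project(view_point: Vec3, focal: float = 1.0) -> Optional[Tuple[float, float]]:
    """Pinhole projection onto the display plane; None if not in front."""
    x, y, z = view_point
    if z >= 0:  # the viewer looks down -z, so z >= 0 is not in front
        return None
    return (focal * x / -z, focal * y / -z)
```

With the head at the origin, a point 2 units straight ahead lands at the center of the display, (0, 0). Turn the head 30 degrees to the left and the same point slides right, to about (0.58, 0); step 1 unit to the right instead and it slides left, to (-0.5, 0). Redoing this calculation every time new tracking data arrives is what keeps the virtual world looking fixed in place as you move. A real headset typically also accounts for head roll, renders a separate image for each eye and corrects for lens distortion, all of which this sketch leaves out.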

In this article, we'll look at the defining characteristics of VR, some of the technology used in VR systems, a few of its applications, some concerns about virtual reality and a brief history of the discipline. In the next section, we'll look at how experts define virtual environments, starting with immersion.
