In the aftermath of the Boston Marathon bombings, the manhunt to end all manhunts was underway. There was just one problem: in spite of their massive advantage in manpower and firepower, authorities couldn't seem to find the perpetrators.

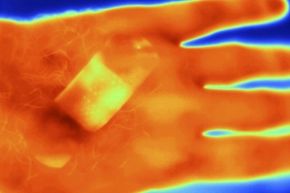

Tipped off by a suspicious homeowner, they finally narrowed their search to a large, covered boat sitting in a driveway. Because the suspect was hidden from sight, they couldn't visually confirm his exact position in the boat, nor could they see whether he was armed. Officers were working in the dark, blind to danger. That's when a thermographic camera helped save the day.


That camera, mounted to a helicopter circling overhead, clearly showed the man lying prone on the floor of the boat. It also revealed that the person was alive and moving. Aided by the visual information from the helicopter, a SWAT team was finally able to approach the boat and apprehend the suspect.

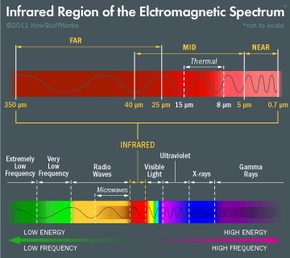

A thermographic camera (also called an infrared camera) detects infrared radiation, the heat energy that objects give off, which is invisible to the human eye. That ability makes these cameras incredibly useful for all sorts of applications, including security, surveillance and military work, where bad guys can be tracked through dark, smoky, foggy or dusty surroundings ... or even when they're hidden under a boat cover.

Archaeologists deploy infrared cameras on excavation sites. Engineers use them to find structural deficiencies. Doctors and medical technicians use them to pinpoint and diagnose problems within the human body. Firefighters peer into the heart of fires. Utility workers detect potential problems on the power grid or find leaks in water or gas lines. Astronomers use infrared technology to explore the depths of space. Scientists use them for a broad range of experimental purposes.

There are different types of thermal imaging devices for all of these tasks, but every one of them relies on the same basic principles. On the next page, we'll take off the blinders and explain exactly how thermal imaging works.
