Avid video game players are familiar with the emotional roller coaster their favorite titles elicit. There's the soaring giddiness of winning a frenetic Super Mario Kart race, and the deflating sadness of getting blasted by an enemy in an epic World of Tanks battle.

But gamers don't generally see that same ecstasy and despondency in their animated, on-screen characters. Typically, those digitally rendered heroes have rough features that only a similarly misshapen, computer-generated mother could love.

That's all about to change. With MotionScan facial animation technology, created by an Australian tech company called Depth Analysis, gamers will see character facial expressions that are truer to life than ever before.

Facial animation technology is a specialized version of motion capture technology, which for years has been used to add all sorts of special animation effects to movies. If you've ever watched behind-the-scenes outtakes of animated movies or films that blend animated characters with live action, such as "The Lord of the Rings," you might recognize part of the motion-capture process. Actors don tight-fitting suits studded with little markers (often resembling golf balls) that cameras recognize, and then perform in front of blank blue or green backgrounds.

Those markers help the cameras track and record the actor's movements in front of the backgrounds, which are called blue screens or green screens. The backgrounds are blank so that the cameras record the actor's performance without any extraneous objects that would clutter the recording or throw off the movement-tracking process.

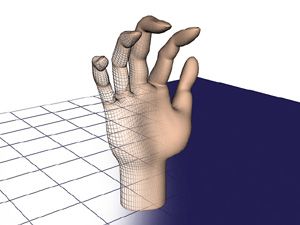

Later, animators can use these recordings to create a digital skeleton or puppet that moves just like the actor. With the help of powerful software, animators overlay this puppet with whatever wacky and imaginative animated character they like. In effect, the animators become puppeteers of sorts, moving the character through scripted scenes. But these animations often lack accurate human body language and facial expressions.

For game designers, this is a major problem because humans are driven by body language. We're able to recognize about 250,000 facial expressions and depend on visual body language for up to 65 percent of our interactions with other people [source: Pease].

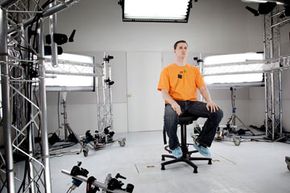

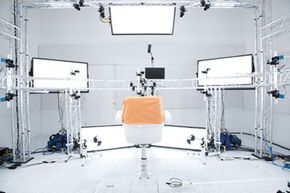

First introduced to the public in early 2010, MotionScan technology aims to create animated characters that convey readable body language. It does so by capturing facial features of performing actors that clearly show the difference between, for instance, disgust and happiness, curiosity and disinterest, and other indicators of a character's emotional state. As you'll see on the next page, this is no easy task.
